Google’s machine learning software can categorize ramen too!

Google is actively working on making its machine learning software as smart as humans. Its latest feat, however, suggests that at some tasks the software may already outdo us. If you are a big enough fan of ramen, you can probably look at a photo of a noodle bowl on Instagram and tell which restaurant it came from. Google's machine learning software goes a step further: it can identify the exact shop a bowl came from, even among branches of the same chain. According to a report published by The Verge, when shown bowls of ramen from 41 shops of the same restaurant franchise, bowls that look nearly identical to the human eye, Google's software picked the right shop about 95 percent of the time.

The Verge's report also describes the researcher behind the work. Data scientist Kenji Doi carried out the experiment using Google's AutoML Vision. With this system, Doi set out to classify photos of ramen from Ramen Jiro, a Tokyo-based chain of ramen restaurants, by the shop they came from. To do so, he gathered about 1,170 photos from each of 41 different shops.

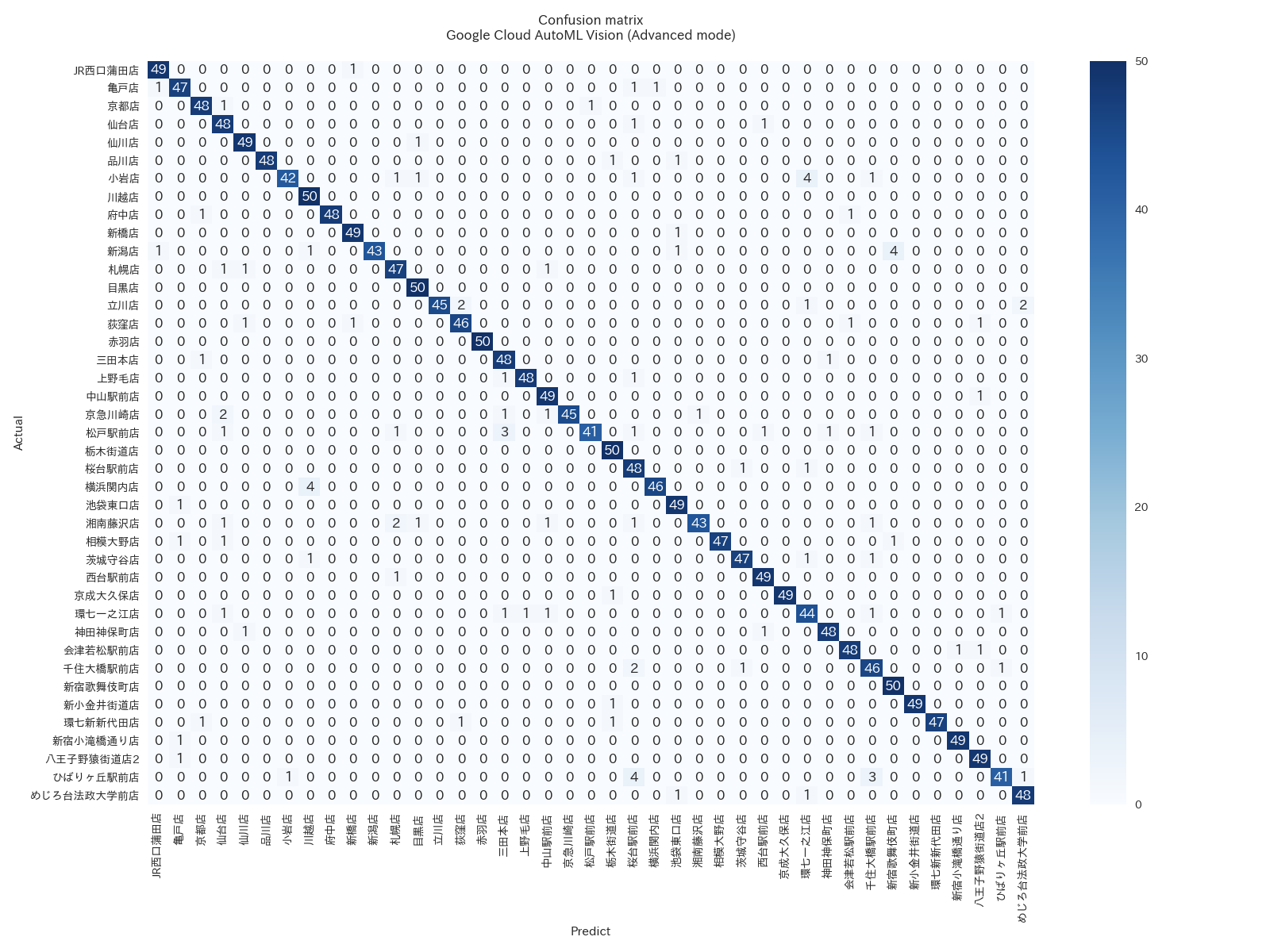

He then fed this dataset of roughly 48,000 ramen photos into AutoML Vision to train a model. According to Doi, training took about 24 hours (or 18 minutes in the less accurate Basic mode). The resulting model could predict which shop a bowl of ramen came from with about 95 percent accuracy.
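The figures above hang together: roughly 1,170 photos from each of 41 shops comes to about 48,000 images, and "95 percent accuracy" means the model named the right shop for about 95 of every 100 photos it was evaluated on. The short Python sketch below illustrates both calculations. It assumes the per-shop count applies evenly to every shop and uses made-up shop labels (`shop_a`, `shop_b`) and a tiny hypothetical test set purely for illustration; it is not how AutoML computes its metrics internally.

```python
# Illustrative sanity check of the reported figures (not AutoML's own code).
SHOPS = 41              # Ramen Jiro shops in the dataset
PHOTOS_PER_SHOP = 1170  # assumed: roughly the same number of photos per shop


def dataset_size(shops: int, per_shop: int) -> int:
    """Total labeled photos if every shop contributes per_shop images."""
    return shops * per_shop


def top1_accuracy(predicted: list[str], actual: list[str]) -> float:
    """Fraction of photos whose predicted shop label matches the true label."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty lists of equal length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


if __name__ == "__main__":
    # 41 * 1,170 = 47,970, i.e. roughly the 48,000 photos reported
    print(dataset_size(SHOPS, PHOTOS_PER_SHOP))

    # Hypothetical test set of 20 photos with one misclassification
    actual = ["shop_a"] * 10 + ["shop_b"] * 10
    predicted = ["shop_a"] * 10 + ["shop_b"] * 9 + ["shop_a"]
    print(top1_accuracy(predicted, actual))  # 19 of 20 correct -> 0.95
```

Accuracy here is simply the share of correct predictions; a real evaluation would also look at which shops get confused with one another.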

The Verge further reports that Doi initially suspected the model was relying on superficial cues, such as the color and shape of the bowl or the table it was served on. That hypothesis did not hold up, because the model could identify specific ramen shops even in photos where the bowl and table design were the same. Doi concluded that the model was accurate enough to distinguish between cuts of meat and the placement of toppings on the noodles.

Google launched Cloud AutoML for developers earlier this year. The search giant's main goal was to let users build custom machine learning models through a simple drag-and-drop interface, without the usual hassle of writing code.

The system aims to take the pain out of writing AI code: instead of programming a model by hand, users can train custom vision models through an image recognition tool and achieve similar results. Companies such as Urban Outfitters and Disney are already using Google's AutoML technology to improve their e-commerce shopping experience. “Products are now being categorized into more detailed characteristics to help customers find what they’re looking for,” notes The Verge.

Google undoubtedly built Cloud AutoML with businesses in mind, and most of its showcased uses so far have been about selling products to customers. Doi's ramen experiment is a welcome change from all that and invites us to think more openly about creative uses for custom model training. Google has not yet commented on the experiment.