A New Tool Could Protect Artists by Sabotaging AI Image Generators

In 2023, the technology sector was abuzz with concerns that artificial intelligence (AI) systems were infringing on artists’ intellectual property. Those concerns spanned creative fields, from the film industry to digital art.

MIT Technology Review, a respected source on technology developments, recently spotlighted a new tool called ‘Nightshade.’ It is designed to bolster artists’ defenses against AI misappropriation by letting them make nearly invisible changes to the pixels of their work before uploading it. If an image altered this way is scraped into an AI training set, those changes can disrupt what the model learns.

Nightshade arrives at a crucial moment. Industry giants such as OpenAI and Meta are embroiled in legal disputes in which they are accused of violating copyright by using artists’ work without permission or compensation.

Nightshade was developed by a team led by Professor Ben Zhao of the University of Chicago. The work is currently undergoing peer review, the standard step the scientific community uses to check a study’s validity and reliability. The team’s goal was to give artists and creators back some control in a landscape increasingly dominated by machine learning. To test Nightshade, the researchers ran experiments against recent Stable Diffusion models and against an AI model they trained from scratch.

Very interesting: protect your online artwork using poisoned data. This looks promising and could kickstart a bigger movement to build tech that can help guard artists from scraping. https://t.co/0jMmKD67tR

— Kyle T Webster (@kyletwebster) October 24, 2023

A new tool lets artists add invisible changes to the pixels in their art before they upload it online so that if it’s scraped into an AI training set, it can cause the resulting model to break in chaotic and unpredictable ways. https://t.co/X5wOqua7Ho

— Chris Wysopal (@WeldPond) October 24, 2023

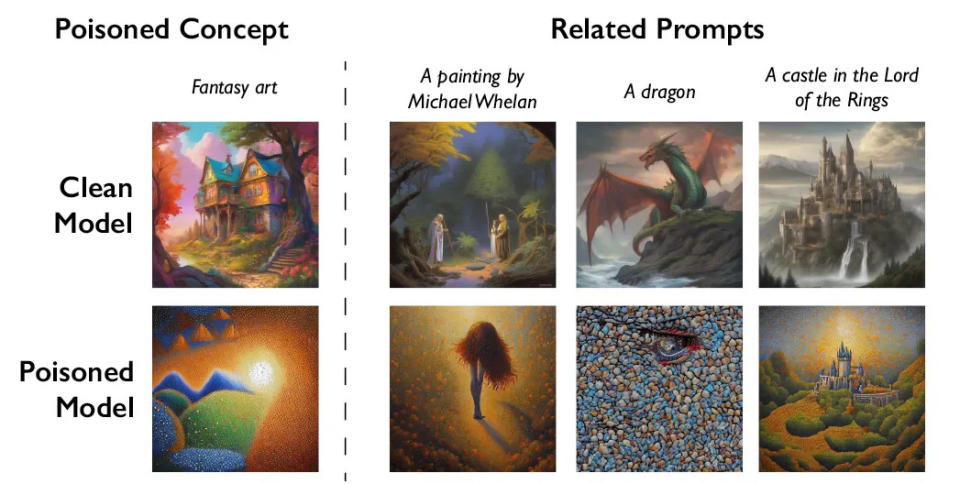

To understand how Nightshade works, think of it as a digital saboteur: it subtly alters what a machine-learning model learns from the images it is trained on. Here is a simplified example. Suppose an artist protects an image of a purse with Nightshade. If enough images like it are scraped into a training set, a model trained on them might produce a toaster when asked for a purse. Similarly, poisoned images of dogs can teach a model to produce a cat when prompted for a dog, and the confusion can spread to related concepts such as ‘puppy’ and ‘wolf.’
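To make the poisoning idea more concrete, here is a deliberately toy Python sketch. It is not Nightshade’s algorithm, which works on real image generators with carefully optimized pixel changes; it only illustrates why mislabeled training data shifts what a model associates with a word. It assumes each image reduces to a hypothetical 2-D feature vector and that the “model” simply learns the average features for each caption. The `train`, `generate`, `looks_like`, and `poison` helpers, the coordinates, and the sample counts are all invented for illustration.

```python
import math
from statistics import fmean

# Hypothetical 2-D "image features": cat-like pictures cluster near (0, 0),
# dog-like pictures cluster near (10, 10). Purely illustrative values.
REFERENCE = {"cat": (0.0, 0.0), "dog": (10.0, 10.0)}


def train(samples):
    """Toy 'model': learn the average feature vector for each caption."""
    grouped = {}
    for caption, features in samples:
        grouped.setdefault(caption, []).append(features)
    return {
        caption: (fmean(f[0] for f in feats), fmean(f[1] for f in feats))
        for caption, feats in grouped.items()
    }


def generate(model, prompt):
    """Toy 'generation': return the learned average features for the prompt."""
    return model[prompt]


def looks_like(features):
    """Name the reference concept whose features are closest."""
    return min(REFERENCE, key=lambda name: math.dist(REFERENCE[name], features))


def poison(target_caption, decoy_features, count):
    """Hypothetical helper: images captioned as the target but looking like a decoy."""
    return [(target_caption, decoy_features)] * count


clean = [
    ("dog", (10, 10)), ("dog", (9, 11)), ("dog", (11, 9)),
    ("cat", (0, 0)), ("cat", (1, -1)), ("cat", (-1, 1)),
]

clean_model = train(clean)
# Five "dog"-captioned samples with cat-like features outnumber the three clean dogs.
poisoned_model = train(clean + poison("dog", (1, 1), count=5))

print(looks_like(generate(clean_model, "dog")))     # dog
print(looks_like(generate(poisoned_model, "dog")))  # cat
```

With only clean data, the model’s “dog” output sits in the dog cluster. Once the poisoned pairs outnumber the clean dog images, the learned “dog” average lands at (4.375, 4.375), closer to the cat cluster, while the “cat” concept is unaffected. Real models are far larger, which is one reason an attacker would need many poisoned images to cause damage.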

Nightshade is not Professor Zhao’s first venture into this area. Earlier in 2023, his team introduced ‘Glaze.’ Like Nightshade, Glaze subtly modifies an artwork’s pixels, but its purpose is to mask an artist’s personal style, tricking AI models into perceiving the work as something different from what it actually shows. Artists who want to shield their work from AI misuse can use Glaze and then opt into Nightshade as an additional layer of protection.

The emergence of tools like Nightshade signals a shift in the relationship between technology and art. It is a proactive measure that could push major AI companies toward more ethical practices, such as seeking permission and fairly compensating artists for their work. Companies that want to get around Nightshade face a tough challenge: they would need to find and remove every poisoned sample from their training data, a task easier said than done given how subtle the changes are.

Professor Zhao does, however, sound a note of caution. Like any tool, Nightshade can be misused. Although it is designed to protect artists, someone with malicious intent who produced a large number of poisoned images could exploit it to sabotage AI models.